
Artificial Intelligence (AI) is becoming the new normal in daily life. We are still nowhere near Skynet-style self-awareness bent on world domination, but more and more AI-based tools and systems are being built into everything from ink pens to refrigerators.

In the biometrics industry, ISO, IEC, NIST, STQC and other organizations are releasing new standards that emphasize security and AI-based approaches, while many researchers are applying different AI techniques to biometric identification methods.

Here we take a brief look at how AI is used in popular biometric methods.

False acceptance and false rejection are long-standing problems across different skin tones and facial features. Deep Learning (DL) and Machine Learning (ML) are now very popular among manufacturers for face analysis, making identification easier and more precise. DL and ML have shown that they can solve tricky security problems and help improve the false acceptance rate across demographic groups. With more training, we have seen very successful results on various face attributes. These biological features represent a person's facial characteristics (skin tone, facial hair, etc.).
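To make the two error rates concrete, here is a minimal sketch of how false acceptance rate (FAR) and false rejection rate (FRR) could be measured per demographic group. The function names, group labels, scores and the 0.7 threshold are all illustrative assumptions, not values from any real system or data set.

```python
from collections import defaultdict


def far_frr(pairs, threshold):
    """Return (FAR, FRR) for a list of (score, is_genuine) pairs.

    A comparison is accepted when its score is at or above the threshold.
    """
    impostor_scores = [s for s, is_genuine in pairs if not is_genuine]
    genuine_scores = [s for s, is_genuine in pairs if is_genuine]
    false_accepts = sum(1 for s in impostor_scores if s >= threshold)
    false_rejects = sum(1 for s in genuine_scores if s < threshold)
    far = false_accepts / len(impostor_scores) if impostor_scores else 0.0
    frr = false_rejects / len(genuine_scores) if genuine_scores else 0.0
    return far, frr


def far_frr_by_group(records, threshold):
    """records: iterable of (group, score, is_genuine) tuples."""
    by_group = defaultdict(list)
    for group, score, is_genuine in records:
        by_group[group].append((score, is_genuine))
    return {g: far_frr(pairs, threshold) for g, pairs in by_group.items()}


# Made-up match scores, for illustration only.
records = [
    ("group_a", 0.91, True), ("group_a", 0.84, True),
    ("group_a", 0.30, False), ("group_a", 0.45, False),
    ("group_b", 0.88, True), ("group_b", 0.62, True),
    ("group_b", 0.75, False), ("group_b", 0.20, False),
]
rates = far_frr_by_group(records, threshold=0.7)
for group, (far, frr) in sorted(rates.items()):
    print(f"{group}: FAR={far:.2f} FRR={frr:.2f}")
```

A gap between groups at the same threshold, as in this toy data, is the kind of demographic difference that additional training is meant to reduce.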


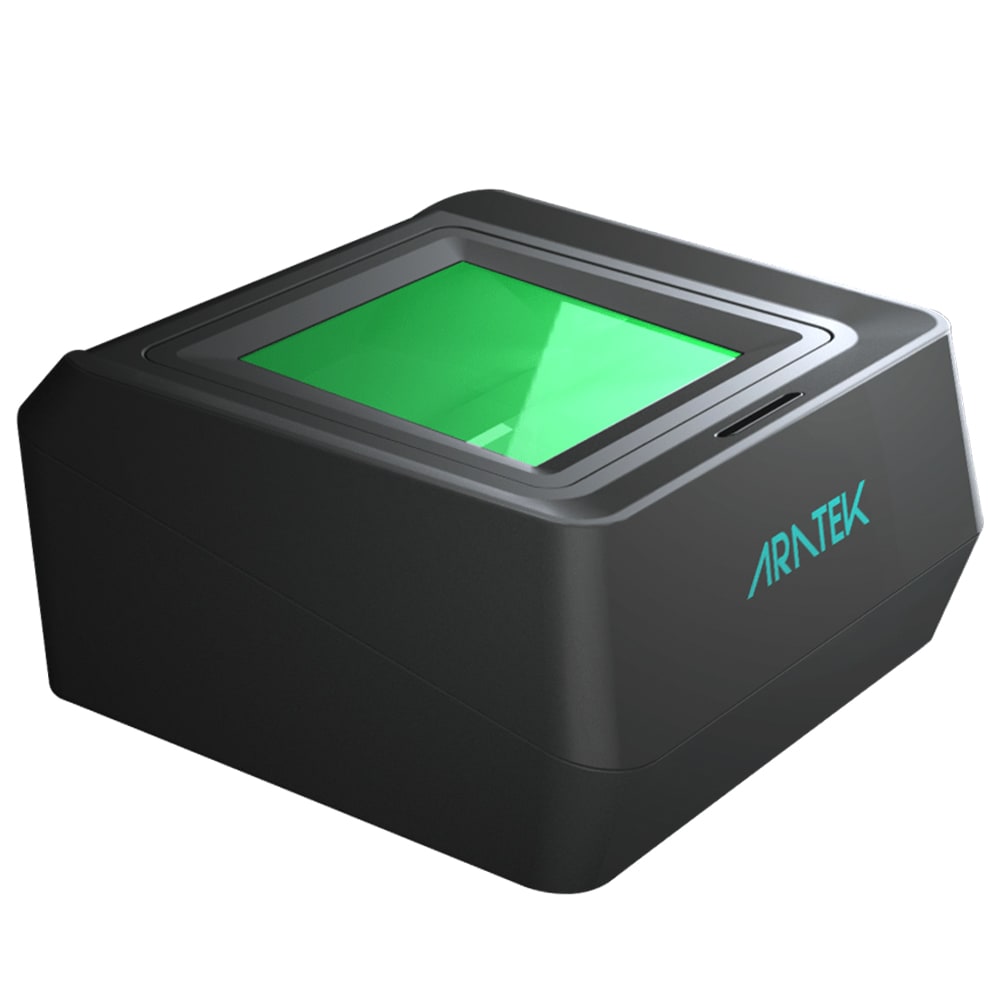

It is well known that some fingerprints are hard to scan or recognize because of skin conditions, damage, scars or a small fingerprint surface area. Low-quality, old, cropped or damaged fingerprint images pose further challenges.

Machine learning techniques such as Artificial Neural Networks (ANN), Deep Neural Networks (DNN), Support Vector Machines (SVM) and Genetic Algorithms (GA) play an important part in delivering unconventional solutions to fingerprint identification problems.

According to a research report, deep learning, especially the Convolutional Neural Network (CNN), has had great success in computer vision and pattern recognition because it does not require hand-crafted feature extraction. Given a sufficient amount of training data, deep learning learns features and structures automatically. These advantages make CNNs well suited to various tasks in automatic fingerprint recognition and identification systems, including segmentation, classification, feature extraction (minutiae points and singular points) and ridge orientation estimation.

In one case, a research team used Machine Learning to teach a system to distinguish the irises of living people from those of deceased people, by building an algorithm and training it on a database of eye images from both. They reached 99% accuracy with one catch: the person must have been deceased for at least 16 hours.

Artificial Intelligence truly shows its strength in the behavior recognition field, where identification has traditionally needed more human involvement. With DL and ML, it becomes possible to build a pattern of a person's behavior that can be used for identification.

AI keeps track of how an individual strikes the keys and the time gap between strokes, and creates a profile for identification.
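As a rough illustration of the keystroke timing idea, the sketch below derives two commonly used features, dwell time (how long a key is held) and flight time (the gap between releasing one key and pressing the next), and compares an attempt against an enrolled profile. The event format, function names and timings are hypothetical. A production system would feed many such features into a trained model rather than use a simple distance.

```python
from statistics import mean


def keystroke_features(events):
    """events: list of (key, press_ms, release_ms), ordered by press time.

    Returns the mean dwell time and mean flight time in milliseconds.
    """
    dwell = [release - press for _, press, release in events]
    flight = [
        events[i + 1][1] - events[i][2]  # next press minus current release
        for i in range(len(events) - 1)
    ]
    return {
        "mean_dwell": mean(dwell),
        "mean_flight": mean(flight) if flight else 0.0,
    }


def profile_distance(a, b):
    """Sum of absolute feature differences; smaller means more similar."""
    return sum(abs(a[name] - b[name]) for name in a)


# Made-up timings for typing "pas" (illustration only).
enrolled = keystroke_features([("p", 0, 100), ("a", 250, 350), ("s", 500, 600)])
same_user = keystroke_features([("p", 0, 110), ("a", 255, 350), ("s", 500, 605)])
other_user = keystroke_features([("p", 0, 60), ("a", 120, 180), ("s", 240, 300)])

print(profile_distance(enrolled, same_user))
print(profile_distance(enrolled, other_user))
```

With these toy values the second attempt sits much further from the enrolled profile than the first, which is the signal an identification system would act on.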

AI observes a person's walking pace, speed and G-force, and creates a profile for identification.
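A simplified sketch of how pace and G-force might be derived from accelerometer readings is shown below. It assumes samples arrive as (x, y, z) accelerations in m/s² at a fixed rate, and it counts a step at each local peak above a hypothetical 1.2 g threshold. The names, threshold and synthetic data are illustrative assumptions, and real gait recognition relies on far richer features and learned models.

```python
import math

STANDARD_GRAVITY = 9.80665  # m/s^2


def magnitudes_in_g(samples):
    """Convert (x, y, z) accelerations in m/s^2 to magnitudes in g."""
    return [math.sqrt(x * x + y * y + z * z) / STANDARD_GRAVITY
            for x, y, z in samples]


def count_steps(mags, threshold=1.2):
    """Count local maxima above the threshold as steps."""
    steps = 0
    for i in range(1, len(mags) - 1):
        is_peak = mags[i] > mags[i - 1] and mags[i] >= mags[i + 1]
        if is_peak and mags[i] > threshold:
            steps += 1
    return steps


def gait_profile(samples, sample_rate_hz):
    """Return cadence (steps per minute) and the peak G-force observed."""
    mags = magnitudes_in_g(samples)
    duration_s = len(samples) / sample_rate_hz
    return {
        "cadence_spm": 60.0 * count_steps(mags) / duration_s,
        "peak_g": max(mags),
    }


# Synthetic 2-second recording at 10 Hz: one impact spike every 0.5 s.
one_stride = [(0.0, 0.0, 9.8), (0.0, 0.0, 9.8), (0.0, 0.0, 14.7),
              (0.0, 0.0, 9.8), (0.0, 0.0, 9.8)]
samples = one_stride * 4
profile = gait_profile(samples, sample_rate_hz=10)
print(profile)
```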

AI-powered speech recognition systems analyze and interpret human speech to identify individual voice patterns and inflections. These systems utilize advanced neural networks to distinguish nuances in speech, supporting applications that range from voice-activated assistants to accessibility tools for those with speech impairments. The capability to process spoken language accurately—adapting to various accents and noises—enhances user interaction and security, broadening AI’s application in behavior recognition.

AI constantly analyzes facial expressions and micro-movements of the facial muscles to detect certain emotions and create a profile. This use of AI has caused such heated debate and concern about privacy and the future of AI that Microsoft and Google decided to limit its development and implementation. However, the lack of standards and regulations leads to development continuing in the shadows.

In their current form, these biometric identification methods can be hacked or tricked in various ways. While AI is helping to improve the performance of biometric identification, ill-intentioned people can also use AI tools to attack biometric systems. For example, we have seen demonstrations in which an AI-generated fake fingerprint easily gained access through a fingerprint scanner. This is a never-ending race, very similar to virus versus anti-virus software. Hackers and criminals may show unlimited motivation to find new methods and loopholes in security systems. McAfee Labs, for instance, predicted that cybercriminals will increasingly use AI-based evasion techniques during cyberattacks.

Computer scientists at New York University and Michigan State University have trained an artificial neural network to create fake digital fingerprints that can bypass locks on cell phones. The fakes are called “DeepMasterPrints”, and they present a significant security flaw for any device relying on this type of biometric data authentication. After exploiting the weaknesses inherent in the ergonomic needs of cellular devices, DeepMasterPrints were able to imitate over 70% of the fingerprints in a testing database.

Face recognition systems can mistake a criminal for a legitimate user if an adversary uses AI tools to analyze the original face and create 3D-printed masks or similar artifacts. These are well-known problems in facial recognition systems. Errors and bias of this kind may lead to fraud, wrongful prosecutions or other serious incidents.

The advent of generative AI deepens these challenges, as these systems can now also generate hyper-realistic fake images and audio. With tools like OpenAI’s Sora, the potential to create convincing fake videos is imminent. This advancement could significantly enhance the spread of misinformation, especially in sensitive contexts like elections, where the authenticity of visual and auditory information is crucial. Addressing these vulnerabilities requires not only technological safeguards but also robust policy interventions to mitigate the risks posed by AI-driven media manipulation.

The misuse of voice biometrics increases with their popularity. Attacks against speech recognition can be launched with the use of malicious voice modification such as inserting patterns of white noise in the audio. They can lead to voice impersonation, fraud or fake voice-based content publication.

While biometric methods have vulnerabilities, AI tools, which are mostly software, have security flaws of their own.

These software blocks are integrated into core systems and can fall prey to attacks targeting AI components. Attackers can also use machine learning and AI to compromise environments by poisoning AI models with inaccurate data. Machine learning and AI models rely on correctly labeled data samples to build accurate and repeatable detection profiles. By introducing malicious files that look similar to benign data files, or by creating patterns of behavior that turn out to be false positives, attackers can trick AI models into believing that attack behaviors pose no threat. Attackers can also contaminate AI models by introducing malicious files that AI training has labeled as safe.
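The effect of mislabeled training data can be shown with a toy example. The sketch below uses a deliberately simple nearest-centroid classifier on a single made-up numeric feature. It is not how any particular product works, and the labels and values are assumptions chosen only to make the shift visible.

```python
from statistics import mean


def train_centroids(samples):
    """samples: list of (feature_value, label). Returns {label: centroid}."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def classify(centroids, value):
    """Assign the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


# Correctly labeled training data (illustrative feature values).
clean = [(1, "benign"), (2, "benign"), (3, "benign"),
         (8, "malicious"), (9, "malicious"), (10, "malicious")]

# Poisoning: attack-like samples slipped into training with a "benign" label.
poison = [(9, "benign")] * 3 + [(10, "benign")] * 3

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

suspicious_sample = 7
print(classify(clean_model, suspicious_sample))     # malicious
print(classify(poisoned_model, suspicious_sample))  # benign
```

The poisoned samples drag the "benign" centroid toward the attack region, so the same suspicious input is now waved through.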


The future looks very exciting with AI and Machine Learning. New AI tools and techniques are going to have a very big impact on every aspect of our lives. In the biometrics industry, researchers are using AI to improve accuracy and performance while also developing new biometric methods such as behavior recognition. Governments and organizations must act fast to catch up with new developments and introduce regulations and ethical rules to ease public concerns. Meanwhile, the security of AI software and hardware against hackers is mostly overlooked and depends on the defenses of the core system it is attached to or embedded in.
